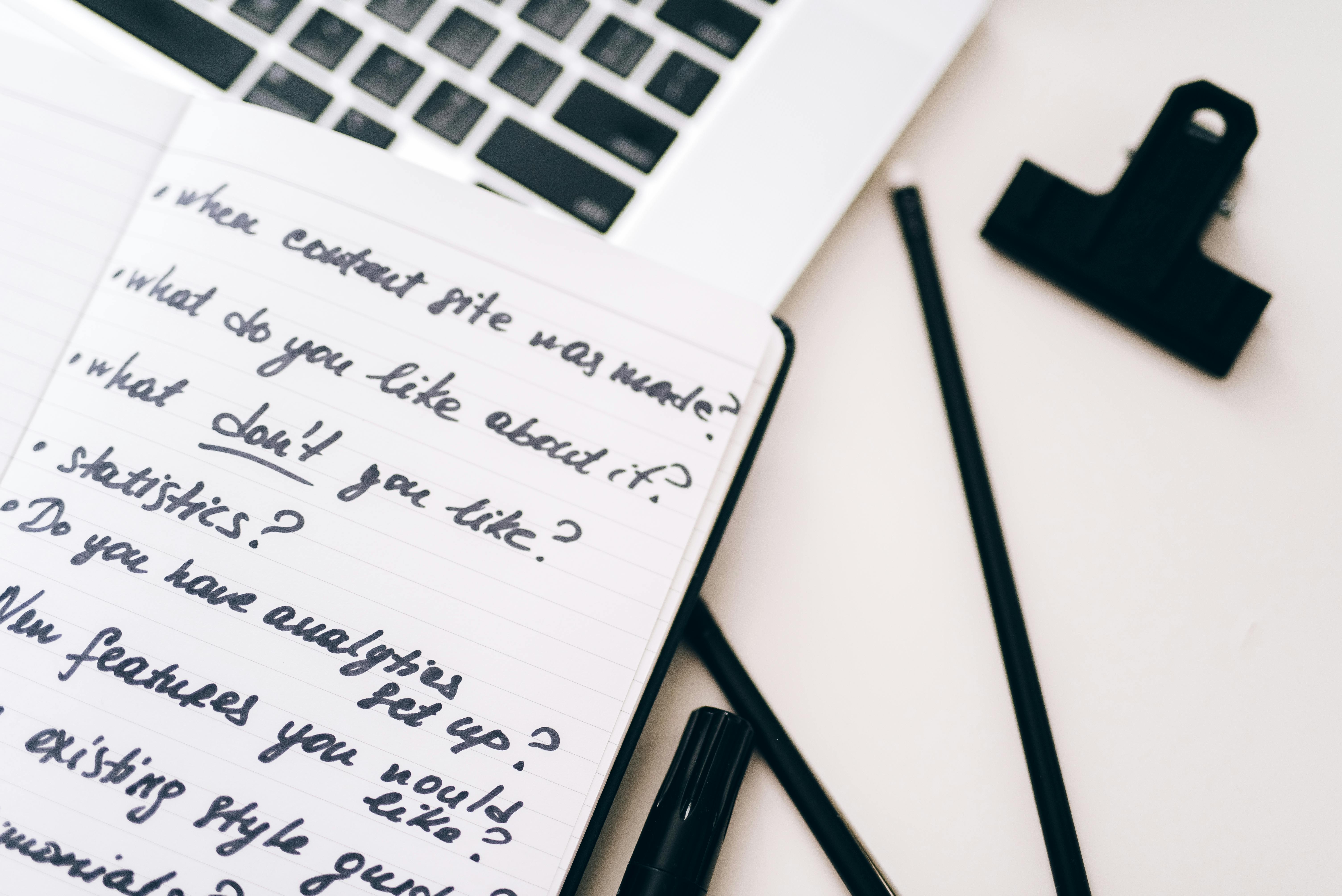

When you've been assigned to write survey, interview, or focus group questions, a blank page can be a terrifying thing. What should we ask? Do our questions make sense? How do we know if we're doing a good job?

Fear not, my friends! Here are my top tips for crafting questions based on over 15 years of writing and testing experience.

Before you start drafting questions, make sure you’re really clear on what you want to know from your audience. If you skip this step, you run the risk of asking vague, muddy questions that don’t produce any actionable information.

For example, let’s say you just put together this amazing workshop for your colleagues about upcoming changes to state policy affecting your organization. You send out a feedback survey that simply asks, “Rate this workshop out of five stars.” That will give you some general feedback, but you won’t have any idea why some people gave it five while others gave it three. How can you improve your workshops in the future if you don’t know what went wrong?

Instead, what if you took some time to think about the feedback that would be most helpful for you and help you determine if the workshop was successful? You might end up asking more specific questions about:

Spending some time to clarify what you want to know will help you craft more specific questions that get at the heart of what you’re trying to find out.

Something I see pretty often is people trying to ask their audiences multiple questions at once. Here’s an example of a survey question that does this:

1. Did the facilitator create a safe and welcoming learning environment for students?

“Safe and welcoming environment” is a phrase that a lot of us use to describe a setting where people feel relaxed and can ask questions freely. But what if someone thought the facilitator welcomed them, but still didn’t feel comfortable asking questions or engaging in the conversation? How can they answer that question honestly?

As another example, I’ll sometimes see this in a list of interview or focus group questions:

2. Can you tell me about a time when you felt frustrated at work? When did it happen? What was the cause? How did you react, and what steps did you take to resolve the problem?

Unfortunately, I’m still thinking about the first question by the time you’re finished asking the fourth. Everyone processes verbal information at different speeds. Instead, ask one key question first (tell me about a time you felt frustrated at work), let people tell you their story, and if there’s something you’d like to learn more about, ask one follow-up question at a time.

You know what I’m talking about. Those phrases and acronyms that we love to throw around in the nonprofit sector that signal that we are nonprofit-y people doing nonprofit-y things: our CRMs, our RFPs, CFREs, our CTAs. Your nonprofit probably has a language of its very own. When I worked in public policy, my projects included the CTTF, JJDPA, STPP, and SCFR. What does any of that mean? I barely remember myself!

These phrases and acronyms sometimes find their way into our survey and interview questions, which makes sense. One, it’s how we talk, and two, we assume that everyone else talks like that, too. Don’t assume everyone else talks like that, too – even if you think they should (looking at you, board members). Spell out your acronyms, define your jargon, and give your audience the best possible chance to provide you with accurate answers.

One thing we’re taught as professional researchers is to ask people to review your questions for clarity before you press send on your survey or start scheduling your focus groups. For instance, you can send questions to your team and ask for their feedback, but be mindful that they may not be able to catch jargon issues, since they speak the same language as you. You can also send your questions to volunteers who are a part of your audience, like program participants, donors, and partner organizations.

Finally, we do survey evaluations here at The Data Coach. You can send us your survey questions, and I’ll put them through a 20-point checklist for clarity, potential biases, and any privacy/confidentiality risks. You’ll get a revised survey with explanations for any changes, plus I’m available to answer any follow-up questions you might have. This is one of our most common service options, and the organizations we have worked with speak highly of the experience.

So that’s it – four tips for asking better questions for surveys, interviews, focus groups, and online forms. If you need additional help, or would like to learn more about our survey evaluations, please send me an email at lindsay@-the-data-coach.com.
